Reinforcement Learning (RL) is a branch of machine learning where agents learn to make decisions by interacting with an environment. One of the complexities in RL is dealing with delayed rewards, where the consequences of actions taken by an agent are not immediately evident but manifest over time. This scenario poses unique challenges and necessitates specific strategies for effective learning and decision-making.

Understanding Delayed Rewards

Delayed rewards occur when there is a significant lag between an agent’s actions and the resulting rewards. This is common in many real-world scenarios such as financial investments, strategic games like chess, or even ecological management, where the impact of decisions unfolds over an extended period.

Challenges Posed by Delayed Rewards

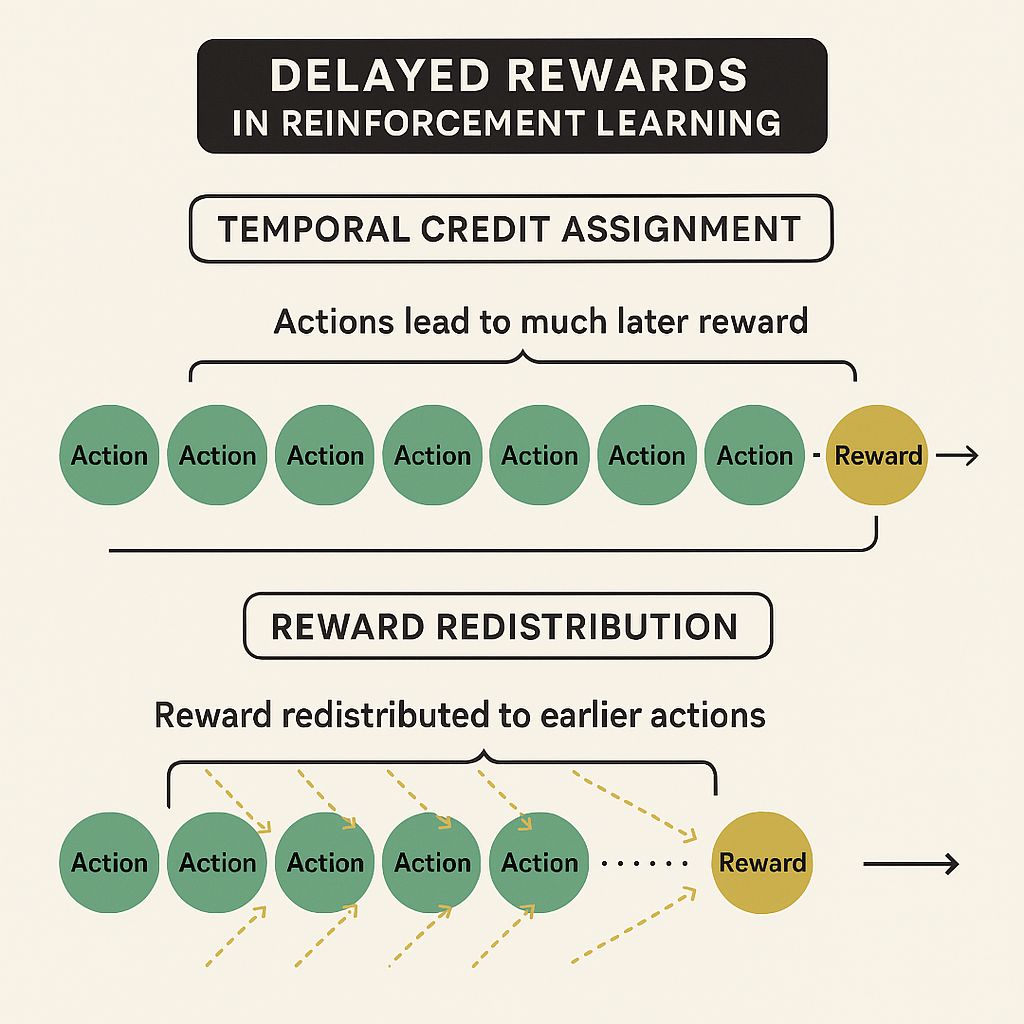

- Temporal Credit Assignment: The primary challenge with delayed rewards is determining which actions are responsible for future outcomes. This problem complicates the learning process, as the agent must learn to associate actions with outcomes that are temporally distant.

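- Complex State Dependencies: When a delayed reward depends on events earlier in the episode that the current state does not record, the reward is no longer determined by the current state and action alone. Relative to the agent’s observed state, the problem becomes non-Markovian, which breaks an assumption of the Markov Decision Process (MDP) framework that standard RL algorithms rely on. A common remedy is to enlarge the state, or give the agent memory, so that the relevant history becomes part of what it conditions on.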
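To make the credit-assignment problem concrete, the short Python sketch below computes discounted returns for a hypothetical five-step episode in which only the final step is rewarded. The function name and the discount factor γ = 0.5 are illustrative choices, not taken from any particular library.

```python
from typing import List

def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Compute G_t = r_t + gamma * G_{t+1} for every step of one episode.

    rewards[t] is the reward received after the action taken at step t.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step can reuse the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

# A 5-step episode in which only the last action is rewarded.
rewards = [0.0, 0.0, 0.0, 0.0, 1.0]
print(discounted_returns(rewards, gamma=0.5))
# → [0.0625, 0.125, 0.25, 0.5, 1.0]
```

Every earlier step is credited only through the discounted return, and that credit shrinks by a factor of γ per step of delay. Nothing in the return itself indicates which of the earlier actions actually mattered.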

Strategies to Handle Delayed Rewards

Reward Redistribution

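Methods such as RUDDER (Return Decomposition for Delayed Rewards) redistribute the rewards within an episode so that reward arrives at the steps that caused it rather than only at the end. Roughly, RUDDER trains a model to predict the episode’s return and credits each step with the change in that prediction, while keeping the optimal policies unchanged. The resulting learning signal is more immediate, which makes it easier for the agent to attribute outcomes to specific actions.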
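The core idea of prediction-difference redistribution can be sketched in a few lines, under simplifying assumptions: the return predictions are supplied as a plain list rather than produced by a trained model (RUDDER uses an LSTM for this), and the helper name `redistribute_rewards` is hypothetical. Each step receives the change in predicted return it caused, and any leftover prediction error is assigned to the final step.

```python
from typing import List

def redistribute_rewards(predicted_returns: List[float], episode_return: float) -> List[float]:
    """Turn a delayed episode return into per-step rewards (simplified sketch).

    predicted_returns[t] is a (hypothetical) model's prediction of the final
    return after it has seen the first t + 1 steps of the episode.
    """
    if not predicted_returns:
        raise ValueError("need at least one prediction")
    redistributed = []
    previous = 0.0
    for prediction in predicted_returns:
        # Step t is credited with how much it changed the predicted return.
        redistributed.append(prediction - previous)
        previous = prediction
    # Put any remaining prediction error on the last step so the
    # redistributed rewards sum to the true episode return.
    redistributed[-1] += episode_return - previous
    return redistributed

# Step 2 is where the prediction jumps, so it receives most of the reward.
print(redistribute_rewards([0.0, 0.0, 0.75, 0.75, 1.0], episode_return=1.0))
# → [0.0, 0.0, 0.75, 0.0, 0.25]
```

Because the per-step rewards telescope back to the episode return, the total reward is preserved; only its timing changes.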

Modified Learning Algorithms

Other approaches develop new algorithms, or modify existing ones, to propagate reward information over longer time spans. Examples include n-step returns and eligibility traces (TD(λ)), which pass a reward back to many preceding steps in a single update, as well as more expressive predictive models of future return.
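One standard algorithmic modification of this kind is TD(λ) with eligibility traces, which spreads each temporal-difference error back over recently visited states. The sketch below is a minimal tabular version with accumulating traces on a hypothetical four-state chain that pays a reward only on its final transition; the function name and parameter values are illustrative.

```python
from typing import List

def td_lambda_episode(num_states: int, final_reward: float, alpha: float,
                      gamma: float, lam: float) -> List[float]:
    """Run one episode of tabular TD(lambda) with accumulating traces.

    The agent moves through states 0, 1, ..., num_states - 1 and then
    terminates; the only nonzero reward arrives on that final transition.
    """
    values = [0.0] * num_states
    traces = [0.0] * num_states
    for state in range(num_states):
        is_last = state == num_states - 1
        reward = final_reward if is_last else 0.0
        next_value = 0.0 if is_last else values[state + 1]
        td_error = reward + gamma * next_value - values[state]
        traces[state] += 1.0  # mark the current state as eligible
        for s in range(num_states):
            # Every eligible state shares in the current TD error...
            values[s] += alpha * td_error * traces[s]
            # ...and its eligibility then decays by gamma * lambda.
            traces[s] *= gamma * lam
    return values

for lam in (0.0, 0.5, 1.0):
    print(lam, td_lambda_episode(num_states=4, final_reward=1.0,
                                 alpha=0.5, gamma=1.0, lam=lam))
# → 0.0 [0.0, 0.0, 0.0, 0.5]
# → 0.5 [0.0625, 0.125, 0.25, 0.5]
# → 1.0 [0.5, 0.5, 0.5, 0.5]
```

With λ = 0 (one-step TD) the delayed reward reaches only the last state after one episode; larger λ lets it reach earlier states immediately, with λ = 1 crediting all of them equally.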

Enhanced Policy Learning

Improving the policy itself is also important. Giving the agent memory, for example a recurrent network such as an LSTM, or using more expressive policy networks lets it retain information over long stretches of an episode, so that its decisions can account for outcomes that only materialize later.
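Memory matters when the information needed for a late, rewarded decision is visible only early in the episode. The toy task below is hypothetical: a cue (0 or 1) is shown at the first step, and the single reward arrives only if the agent’s final action matches it. A practical agent would learn such memory with a recurrent network; here the memory update is hand-coded so the effect is easy to see.

```python
import random
from typing import Optional

NO_CUE = -1  # observation value meaning "no cue visible at this step"

def run_episode(use_memory: bool, cue: int, corridor_length: int,
                rng: random.Random) -> float:
    """One episode of a hypothetical cue-recall task with a delayed reward."""
    memory: Optional[int] = None  # the policy's internal state
    for step in range(corridor_length):
        observation = cue if step == 0 else NO_CUE
        if use_memory and observation != NO_CUE:
            memory = observation  # store the cue for the final decision
    # The final observation carries no cue, so a memoryless policy must guess.
    action = memory if memory is not None else rng.randint(0, 1)
    return 1.0 if action == cue else 0.0

def success_rate(use_memory: bool, episodes: int = 1000, seed: int = 0) -> float:
    """Average reward over many episodes with randomly drawn cues."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(episodes):
        cue = rng.randint(0, 1)
        total += run_episode(use_memory, cue, corridor_length=10, rng=rng)
    return total / episodes

print(success_rate(use_memory=True))   # → 1.0
print(success_rate(use_memory=False))  # roughly 0.5, i.e. chance level
```

The memoryless policy sees identical observations at decision time whichever cue was shown, so no amount of training on the delayed reward alone could push it above chance.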

Practical Applications and Implications

Delayed rewards are prevalent in many areas where decisions have long-term impacts. For example:

- Autonomous Driving: The consequences of an autonomous vehicle’s decisions, such as which route to take or how to maneuver in response to traffic conditions, often become apparent only later in the trip.

- Healthcare Management: In medical treatment planning, actions (treatments) have outcomes that are realized in the future, influencing the long-term health of patients.

Future Directions and Research

The field of RL with delayed rewards continues to evolve, with research focusing on more robust methods for handling the inherent uncertainties and complexities. Future work may explore hybrid models that combine elements of immediate and delayed reward structures to optimize learning across diverse environments.

Conclusion

Handling delayed rewards in reinforcement learning is a critical challenge that mirrors many real-world decision-making scenarios. Strategies that effectively address these delays make reinforcement learning more applicable and powerful for complex, long-term planning problems. Continued advancements in this area promise to enhance the capabilities of autonomous systems, leading to smarter, more responsive technologies.

For a deeper dive into the technical aspects and specific implementations, dedicated research articles and studies on delayed rewards in reinforcement learning offer far more detail than this overview.

Why are delayed rewards a challenge in reinforcement learning?

Delayed rewards complicate the learning process because they require the agent to associate actions with outcomes that are not immediately observable. Without immediate feedback, the learning signal has to be propagated back across many steps, which makes learning slower and noisier for standard reinforcement learning algorithms.

How are delayed rewards managed in reinforcement learning?

Techniques such as reward redistribution, multi-step learning methods like eligibility traces, and memory-based policies are used to manage delayed rewards. These methods help attribute long-term outcomes to the actions that caused them, improving the agent’s ability to learn effective strategies.

What is reward redistribution?

Reward redistribution involves rearranging the rewards within an episode to better align with the actions that led to them. This approach helps clarify the learning signals and improves the agent’s ability to attribute outcomes to specific behaviors accurately.

Can delayed rewards be found in real-world applications?

Yes, delayed rewards are prevalent in many sectors such as autonomous driving, where the effectiveness of navigational decisions unfolds over time, and healthcare management, where the impact of treatment choices affects long-term patient health.