Heuristic optimization is widely used for complex, often NP-hard problems because it finds good solutions efficiently, even though it does not guarantee optimality. Recently, the integration of reinforcement learning (RL) with traditional heuristic methods has led to the development of Reinforcement Learning Driven Heuristic Optimization (RLHO). This approach uses the adaptive learning capabilities of RL to improve the initialization and execution of heuristic algorithms.

How RLHO Enhances Heuristic Methods

Improved Initial Solutions

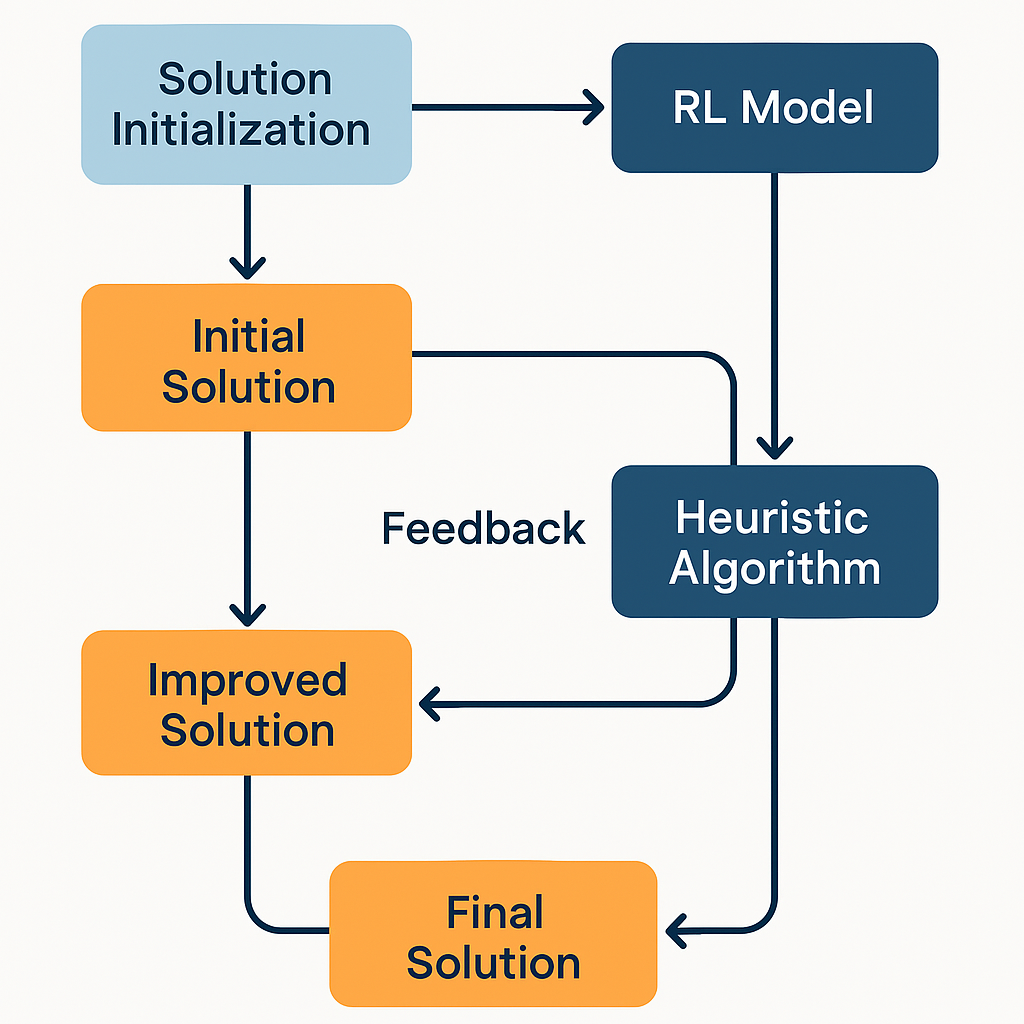

The core idea behind RLHO is to use RL to generate high-quality initial solutions that heuristic algorithms then refine. Unlike random or naive starting points, RL-driven initializations are informed by previous interactions and learning episodes, so they are more likely to lie near good solutions. This can speed up the heuristic optimization process and increase the likelihood of reaching an optimal or near-optimal solution.
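The two-stage idea described above can be sketched in a few lines of Python for bin packing. This is a minimal illustration, not the published RLHO implementation: `construct_with_policy`, `placeholder_score`, and `refine_by_emptying` are hypothetical names, the scoring function is a hand-written stand-in for a trained RL policy, and every item is assumed to fit in an empty bin.

```python
from typing import Callable, List

Bins = List[List[int]]

def construct_with_policy(items: List[int], capacity: int,
                          score: Callable[[int, int, int], float]) -> Bins:
    """Stage 1: place items one at a time in the feasible bin the policy scores highest."""
    bins: Bins = []
    for item in items:
        feasible = [i for i, b in enumerate(bins) if sum(b) + item <= capacity]
        if feasible:
            best = max(feasible, key=lambda i: score(sum(bins[i]), item, capacity))
            bins[best].append(item)
        else:
            bins.append([item])  # no existing bin fits, so open a new one
    return bins

def placeholder_score(load: int, item: int, capacity: int) -> float:
    # ASSUMPTION: stand-in for a learned policy; it prefers the bin that ends up fullest.
    return (load + item) / capacity

def refine_by_emptying(bins: Bins, capacity: int) -> Bins:
    """Stage 2: a simple heuristic that repeatedly tries to empty the lightest bin."""
    bins = [list(b) for b in bins]
    improved = True
    while improved and len(bins) > 1:
        improved = False
        lightest = min(range(len(bins)), key=lambda i: sum(bins[i]))
        others = [list(b) for i, b in enumerate(bins) if i != lightest]
        for item in sorted(bins[lightest], reverse=True):
            target = next((b for b in others if sum(b) + item <= capacity), None)
            if target is None:
                break  # this bin cannot be emptied, keep the current packing
            target.append(item)
        else:
            bins = others  # every item was relocated, so one bin is saved
            improved = True
    return bins

def two_stage_solve(items: List[int], capacity: int) -> Bins:
    initial = construct_with_policy(items, capacity, placeholder_score)
    return refine_by_emptying(initial, capacity)
```

In a real RLHO setup, the scoring function would be replaced by a trained policy, and the refinement stage by a stronger heuristic such as Simulated Annealing.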

Dual Learning Mechanism

RLHO employs a dual feedback system where the RL model is trained using both immediate and long-term rewards:

- Immediate Reward: This is typically the improvement in solution quality from one iteration to the next, encouraging the RL agent to make decisions that lead to direct enhancements.

- Long-term Reward: Provided by the performance of the heuristic optimization (HO) post-RL initialization, this feedback helps the RL algorithm understand and integrate the effects of its actions on the final outcomes of the heuristic process.
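One way to combine the two signals is sketched below. The exact reward definition is an assumption made for illustration: the immediate reward is taken as the cost decrease at each RL step, and the long-term reward as the additional cost decrease the heuristic achieves after the RL rollout, added to the last RL step. The function name `dual_feedback_returns` and the parameters `gamma` and `long_term_weight` are hypothetical.

```python
from typing import List

def dual_feedback_returns(rl_costs: List[float], ho_final_cost: float,
                          gamma: float = 1.0,
                          long_term_weight: float = 1.0) -> List[float]:
    """Return the discounted return for each RL step.

    rl_costs[t] is the solution cost before RL step t; the last entry is the
    cost of the solution handed to the heuristic. ho_final_cost is the cost
    after the heuristic has refined that solution.
    """
    if len(rl_costs) < 2:
        raise ValueError("need at least one RL step (two cost values)")
    # Immediate reward: how much each RL step lowered the cost.
    rewards = [before - after for before, after in zip(rl_costs, rl_costs[1:])]
    # Long-term reward (ASSUMED form): extra improvement found by the heuristic.
    rewards[-1] += long_term_weight * (rl_costs[-1] - ho_final_cost)
    # Discounted returns, accumulated from the last step backwards.
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

print(dual_feedback_returns([10, 8, 7], ho_final_cost=5))  # [5.0, 3.0]
```

With `gamma=1.0` and `long_term_weight=1.0`, the return at the first step equals the total cost reduction from the starting solution to the heuristic's final solution, which ties the RL agent's objective to the end result of the whole pipeline.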

Integration with Existing Heuristic Algorithms

One of the key strengths of RLHO is its compatibility with established heuristic methods such as Simulated Annealing (SA) and Genetic Algorithms (GA). Starting from an RL-generated solution lets these algorithms concentrate their search on promising regions of the solution space instead of spending iterations on poor ones. This integration has been reported to improve both efficiency and solution quality on problems such as bin packing, a classic combinatorial optimization challenge.
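To make the hand-off concrete, here is a minimal Simulated Annealing sketch for bin packing that accepts any feasible initial solution, which is where an RL-generated packing would plug in. The cost function, the geometric cooling schedule, and the single-item move are illustrative assumptions, and moves in this simplified neighborhood never open a new bin.

```python
import math
import random
from typing import List

Bins = List[List[int]]

def packing_cost(bins: Bins, capacity: int) -> float:
    # ASSUMED cost: bin count, with mean squared fill as a tie-breaker
    # so that unevenly filled packings (easier to empty) score lower.
    if not bins:
        return 0.0
    return len(bins) - sum((sum(b) / capacity) ** 2 for b in bins) / len(bins)

def simulated_annealing(initial_bins: Bins, capacity: int, steps: int = 2000,
                        t_start: float = 1.0, t_end: float = 0.01,
                        seed: int = 0) -> Bins:
    rng = random.Random(seed)
    current = [list(b) for b in initial_bins if b]
    cur_cost = packing_cost(current, capacity)
    best, best_cost = [list(b) for b in current], cur_cost
    for step in range(steps):
        if len(current) < 2:
            break  # a single bin cannot be improved by moving items
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / max(1, steps - 1))
        src, dst = rng.sample(range(len(current)), 2)
        idx = rng.randrange(len(current[src]))
        if sum(current[dst]) + current[src][idx] > capacity:
            continue  # infeasible move, skip it
        candidate = [list(b) for b in current]
        candidate[dst].append(candidate[src].pop(idx))
        candidate = [b for b in candidate if b]  # drop a bin that became empty
        delta = packing_cost(candidate, capacity) - cur_cost
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current, cur_cost = candidate, cur_cost + delta
            if cur_cost < best_cost:
                best, best_cost = [list(b) for b in current], cur_cost
    return best
```

Calling `simulated_annealing(rl_solution, capacity)` with a packing produced by a trained policy, instead of one item per bin, is the integration point the paragraph above describes.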

Case Studies and Performance Evaluation

RLHO has been tested against traditional methods on benchmarks such as the bin packing problem, where the goal is to minimize the number of fixed-capacity bins needed to pack a given set of items. In these evaluations, RLHO is reported to outperform basic heuristic approaches and to adapt more effectively to changes in problem parameters, such as increases in the number of items or variations in item sizes.
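For readers unfamiliar with the benchmark, the sketch below shows the bin packing objective together with first-fit decreasing, a standard basic heuristic of the kind such comparisons use as a baseline. It is not taken from any RLHO evaluation; the item sizes and capacity are made-up example values.

```python
import math
from typing import List

def first_fit_decreasing(items: List[int], capacity: int) -> List[List[int]]:
    """Pack items largest-first, placing each in the first bin where it fits."""
    bins: List[List[int]] = []
    for item in sorted(items, reverse=True):
        if item > capacity:
            raise ValueError(f"item {item} exceeds bin capacity {capacity}")
        for b in bins:
            if sum(b) + item <= capacity:
                b.append(item)
                break
        else:
            bins.append([item])  # no existing bin had room
    return bins

def lower_bound(items: List[int], capacity: int) -> int:
    """No packing can use fewer bins than total size divided by capacity, rounded up."""
    return math.ceil(sum(items) / capacity)

items = [4, 8, 1, 4, 2, 1]  # example data, chosen for illustration
packing = first_fit_decreasing(items, capacity=10)
print(packing)                           # [[8, 2], [4, 4, 1, 1]]
print(len(packing), lower_bound(items, 10))  # 2 2
```

The number of bins returned is the quantity being minimized, and the gap to `lower_bound` gives a quick sense of how much room a stronger method has to improve on the baseline.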

Challenges and Future Directions

Despite its promising results, RLHO faces several challenges:

- Complexity of Training: The dual feedback mechanism, while effective, introduces additional complexity into the training process, requiring careful tuning and potentially extensive computational resources.

- Scalability: Although RLHO is effective on known benchmark problems, scaling it to large, real-world problems presents logistical and computational hurdles that are yet to be fully addressed.

Future research in RLHO is likely to explore more sophisticated RL models and their integration with a broader array of heuristic algorithms. Improvements in training efficiency and scalability are also critical areas of focus that will determine the practical viability of RLHO in industrial and commercial applications.

Conclusion

Reinforcement Learning Driven Heuristic Optimization combines the exploratory power of reinforcement learning with the efficiency of heuristic methods, opening up new possibilities for solving some of the most challenging problems in computer science and operations research. As the field continues to evolve, it may offer more robust, efficient, and adaptable solutions across a range of industries and applications.

What is Reinforcement Learning Driven Heuristic Optimization (RLHO)?

RLHO is an approach that combines reinforcement learning (RL) with traditional heuristic optimization techniques. It uses the adaptive learning capabilities of RL to generate better initial solutions for heuristic algorithms, which can improve their performance and efficiency.

How does RLHO improve heuristic methods?

RLHO enhances heuristic methods by providing high-quality initial solutions that are closer to optimal. Because the algorithms start from a better position, they typically require fewer iterations to find an optimal or near-optimal solution, which improves the overall effectiveness of the heuristic optimization process.

What are the key components of RLHO?

RLHO consists of two main components: a reinforcement learning model that generates initial solutions and a heuristic algorithm that refines these solutions. The RL model is trained using a dual feedback system that includes immediate rewards for solution improvement and long-term rewards based on the heuristic’s performance.

Can RLHO be integrated with any heuristic algorithm?

RLHO is designed to work with a range of heuristic algorithms, and it has been paired with established methods such as Simulated Annealing (SA) and Genetic Algorithms (GA). In principle, any heuristic that accepts an initial solution can be used, which allows flexible adaptation to different types of optimization problems.

What challenges does RLHO face?

The main challenges include the complexity of training the RL model, especially managing the dual feedback system, and the scalability of the approach to large-scale, real-world problems. Additionally, finding the right balance between the RL model and the heuristic algorithm is crucial for maximizing performance.