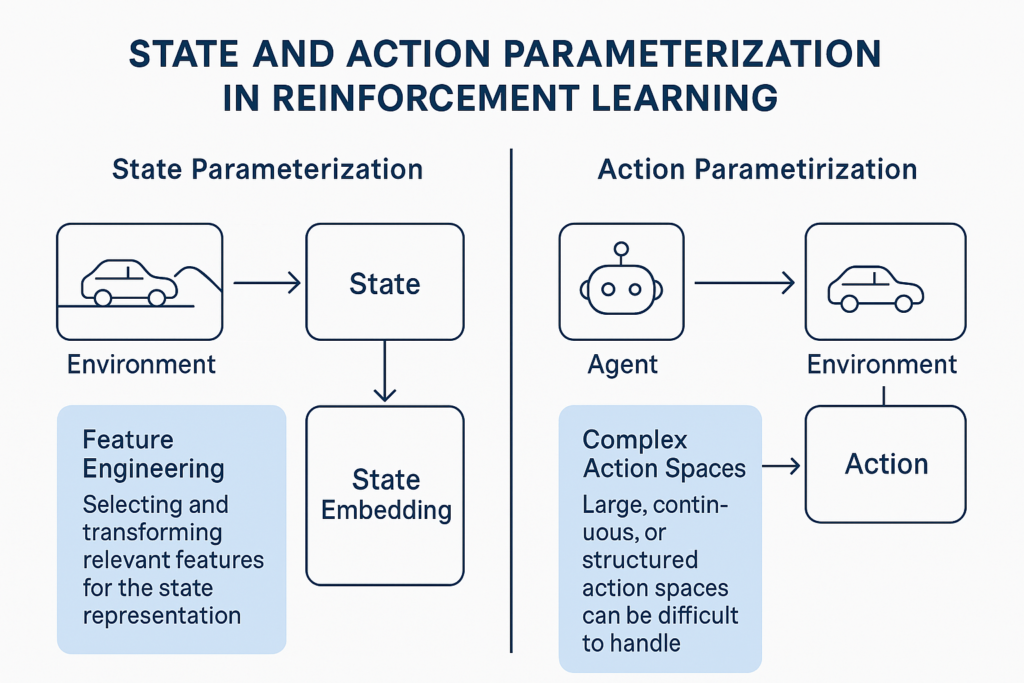

Reinforcement Learning (RL) is a branch of machine learning in which an agent learns to make decisions by interacting with its environment and receiving rewards. How well an RL agent learns depends heavily on how its observations and its choices are represented. This is where state and action parametrization come in: state parametrization defines how the agent perceives its environment, while action parametrization defines the set of actions it can take.

State Parametrization in Reinforcement Learning

Importance of State Representation

State parametrization is critical because it translates the complexity of the environment into a structured format that the RL agent can process. Every decision the agent makes is conditioned on this representation, so a well-chosen state can significantly speed up learning and improve performance by exposing the information relevant to the task and omitting redundant data.
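
To make the idea concrete, here is a minimal Python sketch of a state parametrization for a hypothetical 2-D navigation task. The `RawObservation` fields, the `featurize` function, and the normalization constants are illustrative assumptions, not part of any particular library. The sketch keeps what matters for reaching the goal (distance, direction, velocity), rescales it to comparable ranges, and drops fields such as a timestamp that carry no decision-relevant information.

```python
import math
from dataclasses import dataclass


@dataclass
class RawObservation:
    """Hypothetical raw observation from a 2-D navigation task."""
    x: float
    y: float
    vx: float
    vy: float
    goal_x: float
    goal_y: float
    timestamp: float   # not relevant to the decision; dropped by featurize
    robot_color: str   # not relevant to the decision; dropped by featurize


def featurize(obs: RawObservation, max_speed: float = 2.0,
              arena_size: float = 10.0) -> list[float]:
    """Map a raw observation to a compact, normalized feature vector.

    max_speed and arena_size are assumed constants used only for scaling.
    """
    dx = obs.goal_x - obs.x
    dy = obs.goal_y - obs.y
    distance = math.hypot(dx, dy)
    heading_to_goal = math.atan2(dy, dx)  # atan2(0, 0) == 0, so no division by zero
    return [
        distance / arena_size,         # distance to goal, roughly in [0, 1]
        math.cos(heading_to_goal),     # direction to goal as a cos/sin pair
        math.sin(heading_to_goal),
        obs.vx / max_speed,            # velocity scaled to roughly [-1, 1]
        obs.vy / max_speed,
    ]


obs = RawObservation(x=1.0, y=1.0, vx=0.5, vy=0.0,
                     goal_x=4.0, goal_y=5.0, timestamp=12.3, robot_color="red")
features = featurize(obs)  # approximately [0.5, 0.6, 0.8, 0.25, 0.0]
```

Encoding the direction as a cosine/sine pair is one common design choice: it avoids the jump between +pi and -pi that a raw angle would present to the learner.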

Techniques for Effective State Representation

- Feature Engineering: Identifying and selecting the attributes of the environment that are most informative for the agent's decisions, for example the distance and direction to a goal rather than raw coordinates. Good features make the relevant structure of the task easier for the agent to learn.

- Dimensionality Reduction: Techniques such as PCA (Principal Component Analysis) reduce the number of variables describing the state by projecting it onto a small set of principal components, the directions along which the data varies most. This simplification is vital in complex environments where the state space can become overwhelmingly large.

- State Embedding: A related form of dimensionality reduction that maps the original state into a lower-dimensional space, usually with a learned model such as a neural network. It is particularly useful when states are naturally high-dimensional, like images or sensor data.
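
The PCA step can be sketched in a few lines of NumPy. This assumes states have already been collected into a `(num_samples, state_dim)` array; `fit_pca` and `project` are hypothetical helper names, and the synthetic data (10-dimensional states that really vary along only 2 directions, plus a little noise) is made up for illustration.

```python
import numpy as np


def fit_pca(states: np.ndarray, n_components: int):
    """Fit PCA to a (num_samples, state_dim) array of collected states."""
    mean = states.mean(axis=0)
    centered = states - mean
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    # Fraction of total variance captured by each component.
    explained = singular_values**2 / np.sum(singular_values**2)
    return mean, components, explained[:n_components]


def project(state: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Map a state (or a batch of states) to its low-dimensional coordinates."""
    return (state - mean) @ components.T


# Synthetic example: 10-D states generated from a 2-D latent variable plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 10))
states = latent @ mixing + 0.01 * rng.normal(size=(500, 10))

mean, components, explained = fit_pca(states, n_components=2)
low_dim = project(states, mean, components)  # shape (500, 2)
# explained.sum() is close to 1.0 because the data is essentially 2-D.
```

In practice, `sklearn.decomposition.PCA` offers the same computation. Because PCA is linear, it cannot capture curved structure in the data; that is one reason learned embeddings such as autoencoders are used for images and other high-dimensional inputs.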

Action Parametrization in Reinforcement Learning

Defining Action Space

Action parametrization describes the actions available to the agent. In simpler RL tasks, this might be a handful of discrete actions, but in more complex scenarios the action space can be continuous, described by real-valued variables, or a hybrid of discrete and continuous components.
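
The three kinds of action space described above can be captured with small data structures. This is a minimal pure-Python sketch; the class names `DiscreteSpace`, `BoxSpace`, and `HybridSpace` and the example actions are hypothetical. In a hybrid space, the agent first picks a discrete action and then supplies the continuous parameters that belong to that action.

```python
import random
from dataclasses import dataclass


@dataclass
class DiscreteSpace:
    n: int  # actions are the integers 0..n-1

    def sample(self, rng: random.Random) -> int:
        return rng.randrange(self.n)

    def contains(self, a) -> bool:
        return isinstance(a, int) and 0 <= a < self.n


@dataclass
class BoxSpace:
    low: list[float]   # per-dimension lower bounds
    high: list[float]  # per-dimension upper bounds

    def __post_init__(self):
        if len(self.low) != len(self.high) or any(lo > hi for lo, hi in zip(self.low, self.high)):
            raise ValueError("low and high must have equal length with low <= high")

    def sample(self, rng: random.Random) -> list[float]:
        return [rng.uniform(lo, hi) for lo, hi in zip(self.low, self.high)]

    def contains(self, a) -> bool:
        return len(a) == len(self.low) and all(
            lo <= v <= hi for v, lo, hi in zip(a, self.low, self.high))


@dataclass
class HybridSpace:
    """A discrete choice of action, each with its own box of continuous parameters."""
    params: dict[str, BoxSpace]

    def sample(self, rng: random.Random) -> tuple[str, list[float]]:
        name = rng.choice(sorted(self.params))
        return name, self.params[name].sample(rng)

    def contains(self, a) -> bool:
        name, values = a
        return name in self.params and self.params[name].contains(values)


# Hypothetical robot actions: "turn" takes an angle; "push" takes a strength and an angle.
space = HybridSpace({
    "turn": BoxSpace(low=[-180.0], high=[180.0]),
    "push": BoxSpace(low=[0.0, -180.0], high=[100.0, 180.0]),
})
action = space.sample(random.Random(0))  # e.g. ("push", [strength, angle])
```

Libraries such as Gymnasium provide ready-made `Discrete`, `Box`, and `Tuple` spaces that play the same role.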

Challenges of Parameterized Actions

In environments with complex actions, such as those requiring precise control or manipulation, it is challenging to define an action space that lets the agent perform well while keeping computation tractable. Actions may need additional parameters to describe everything the agent can do, such as the angle and strength of a robot's joint movement.
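
Continuous parameters such as a joint angle must stay within physical limits, while a neural network's raw outputs are unbounded. One common approach, shown here as a sketch, squashes each raw output with `tanh` and rescales it to the parameter's range. The angle limits, the strength range, and the function names are assumptions for illustration.

```python
import math

# Hypothetical limits: joint angle in degrees, strength as a fraction of maximum torque.
ANGLE_RANGE = (-90.0, 90.0)
STRENGTH_RANGE = (0.0, 1.0)


def squash_to_range(raw: float, low: float, high: float) -> float:
    """Map an unbounded real number into [low, high] via tanh.

    tanh(raw) lies in [-1, 1]; shifting and scaling maps that onto [low, high],
    with raw == 0 landing exactly at the midpoint.
    """
    return low + (math.tanh(raw) + 1.0) * 0.5 * (high - low)


def joint_command(raw_angle: float, raw_strength: float) -> tuple[float, float]:
    """Turn two raw network outputs into a valid (angle, strength) joint command."""
    angle = squash_to_range(raw_angle, *ANGLE_RANGE)
    strength = squash_to_range(raw_strength, *STRENGTH_RANGE)
    return angle, strength


print(joint_command(0.0, 0.0))  # (0.0, 0.5): midpoints of both ranges
```

Clipping the raw output to the range is a simpler alternative, but it gives zero gradient once the value falls outside the bounds, whereas `tanh` stays smooth and differentiable everywhere (though its gradient becomes small near the limits).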

Integration of State and Action Parametrization

The interplay between state and action parametrization is what allows RL agents to function effectively. The state representation provides the context, or "scenario", while the action parametrization offers the "responses" or "moves" available to the agent. Both must be designed to suit the task and each other for the agent to learn efficiently and perform well.

Example: Deep Reinforcement Learning in Parameterized Action Space

A notable application of sophisticated action parametrization is in simulated environments like RoboCup soccer, where each action (like passing or shooting) can have various parameters (direction, speed). Researchers have employed deep neural networks to manage these parameterized actions, enabling agents to perform complex behaviors that are both effective and adaptable to dynamic game situations.
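
One common way to let a single network handle parameterized actions is to have it output a score for each discrete action together with the parameters of every action; the agent then executes the highest-scoring action with that action's own parameters. The sketch below decodes such an output for the pass/shoot example. The flat output layout, the `decode_actor_output` name, and the action list are assumptions made for illustration, not the exact design used in the RoboCup work.

```python
import numpy as np

# Hypothetical action set, following the text: each action has a direction and a speed.
ACTIONS = ["pass", "shoot"]
PARAMS_PER_ACTION = 2  # (direction, speed)


def decode_actor_output(output: np.ndarray) -> tuple[str, np.ndarray]:
    """Split a flat network output into (chosen action, that action's parameters).

    Assumed layout: [score_0, ..., score_{K-1},
                     params of action 0, params of action 1, ...]
    """
    k = len(ACTIONS)
    expected = k + k * PARAMS_PER_ACTION
    if output.shape != (expected,):
        raise ValueError(f"expected shape ({expected},), got {output.shape}")
    scores = output[:k]
    params = output[k:].reshape(k, PARAMS_PER_ACTION)  # one row of parameters per action
    best = int(np.argmax(scores))
    return ACTIONS[best], params[best]


out = np.array([0.2, 1.3,      # scores for pass, shoot
                45.0, 0.6,     # pass: direction, speed
                -10.0, 0.9])   # shoot: direction, speed
action, params = decode_actor_output(out)  # action == "shoot", params holds [-10.0, 0.9]
```

Outputting parameters for every action, not just the chosen one, keeps the network's output size fixed regardless of which action ends up being selected.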

Conclusion

Effective parametrization in reinforcement learning is pivotal for developing intelligent agents capable of navigating and acting in complex environments. As the field progresses, the integration of advanced machine learning models, particularly deep learning, into state and action parametrization continues to push the boundaries of what is possible in artificial intelligence. This progression not only enhances the capability of RL agents but also broadens their applicability to real-world tasks, making them more precise, adaptable, and efficient.

What is state parametrization in reinforcement learning?

State parametrization involves defining how an RL agent perceives its environment. It translates real-world scenarios into a structured format that the agent can understand, focusing on extracting and representing only the most relevant information for decision-making.

Why is action parametrization important in reinforcement learning?

Action parametrization determines the set of possible actions an agent can choose from in a given state. In complex environments, actions may need to be described by continuous variables or a combination of discrete and continuous parameters, allowing for precise control and nuanced agent behaviors.

How do feature engineering and state embedding improve state parametrization?

Feature engineering involves selecting the most informative and relevant features of an environment, which enhances the agent’s perception and decision-making. State embedding, often achieved through techniques like autoencoders, compresses high-dimensional data into a lower-dimensional space, making the state representation more manageable.

What challenges arise with parameterized action spaces in reinforcement learning?

Parameterized action spaces, especially those involving continuous or multi-dimensional actions, increase the complexity of the learning process. They require sophisticated algorithms that can handle the high dimensionality and ensure efficient learning and decision-making.
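
A quick count shows why high dimensionality is a problem. A naive workaround for continuous parameters is to discretize each one into a fixed number of bins and treat every combination as a separate discrete action, but the number of such actions grows exponentially with the number of parameters. The helper below is hypothetical and assumes the same number of bins for every parameter.

```python
def flat_action_count(params_per_action: list[int], bins: int) -> int:
    """Count the discrete actions produced by gridding each continuous parameter.

    params_per_action[i] is the number of continuous parameters of discrete action i.
    An action with p parameters expands into bins**p grid points; an action with
    no parameters stays a single action (bins**0 == 1).
    """
    return sum(bins ** p for p in params_per_action)


# Two actions (pass, shoot), each with direction and speed, 10 bins per parameter:
print(flat_action_count([2, 2], bins=10))  # 200
# Hypothetical arm: one action controlling 7 joint angles at the same resolution:
print(flat_action_count([7], bins=10))     # 10000000
```

Ten bins is still a coarse resolution, yet the seven-parameter case already yields ten million discrete actions, which is why methods that output continuous parameters directly are preferred for such spaces.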

Can you give an example of an application of state and action parametrization?

An example is simulated RoboCup soccer, where agents perform actions like passing or shooting, each parameterized by variables such as direction and speed. This requires advanced action parametrization techniques so that agents can execute complex, dynamic strategies effectively.