Reinforcement Learning Driven Heuristic Optimization (RLHO) is a hybrid approach that pairs the adaptive capabilities of reinforcement learning (RL) with the efficiency of heuristic methods. It is designed to tackle complex optimization problems more effectively by drawing on the strengths of both, with the aim of improving solution quality and reducing processing time.

Understanding RLHO

Conceptual Framework

RLHO changes the traditional heuristic optimization process by adding a learned layer that guides heuristic decision-making. Reinforcement learning, which learns good actions through trial and error, supplies heuristic algorithms with better starting points or search pathways, reducing the time and computational resources needed to reach high-quality solutions. Because heuristics do not generally guarantee optimality, the realistic goal is a better solution for the same budget, not a provably optimal one.
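One way to write this idea down is sketched here. The notation is an assumption introduced for illustration and is not taken from a specific RLHO paper: $f$ is the cost to minimize, $H$ is the heuristic that maps a starting solution $s_0$ to an improved one, $\pi_\theta$ is the RL policy with parameters $\theta$, and $x$ is a problem instance drawn from a distribution $\mathcal{D}$.

$$
s_0 \sim \pi_\theta(\cdot \mid x), \qquad
s^{\ast} = H(s_0;\, x), \qquad
r = -f(s^{\ast};\, x)
$$

$$
\max_{\theta} \; \mathbb{E}_{x \sim \mathcal{D},\; s_0 \sim \pi_\theta(\cdot \mid x)} \big[ -f\big(H(s_0;\, x);\, x\big) \big]
$$

Under this reading, the agent is never rewarded for the quality of its own proposal $s_0$, only for the cost of what the heuristic produces from it, which is what ties the learning signal to heuristic performance.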

How RLHO Works

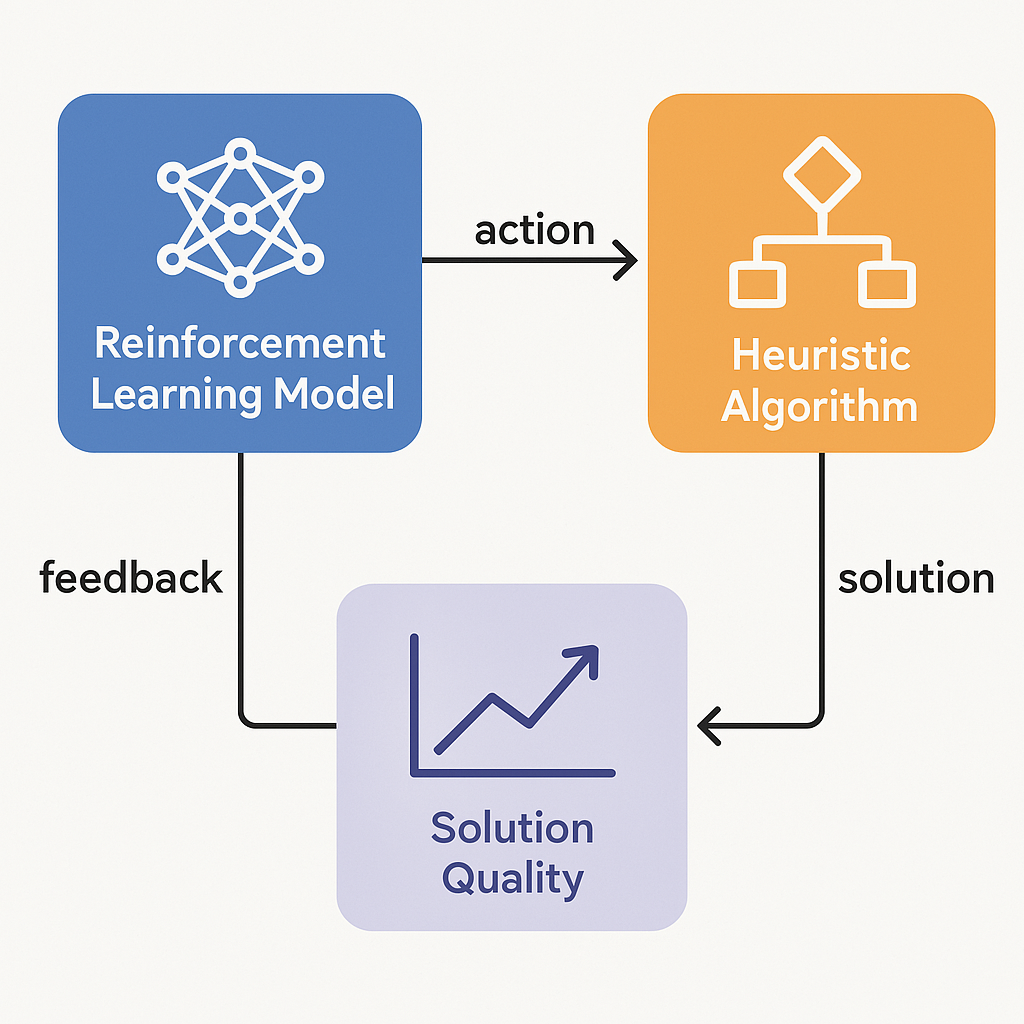

The process starts with an RL agent learning to predict initial conditions or strategies that are likely to yield better outcomes when processed by a heuristic algorithm. The RL agent receives feedback based on the performance of the heuristic solution and continuously adjusts its strategy to improve future results. This feedback loop creates a dynamic system in which the two components, RL and heuristic optimization, reinforce each other: the heuristic supplies the learning signal, and the agent supplies better inputs to the heuristic.
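The loop described above can be illustrated with a deliberately small sketch. Everything in it is an assumption chosen for brevity, not the method of any particular RLHO system: the objective `cost` is a made-up multimodal function on the integers 0..99, the heuristic is plain greedy hill climbing, and the "RL agent" is an epsilon-greedy bandit that learns which region of the search space makes the best starting point.

```python
import math
import random


def cost(x: int) -> float:
    """Toy multimodal objective on 0..99 (hypothetical, for illustration only)."""
    return 0.01 * (x - 70) ** 2 + 3.0 * math.sin(0.5 * x)


def hill_climb(start: int, lo: int = 0, hi: int = 99) -> int:
    """Heuristic: step to the better neighbour until no neighbour improves."""
    x = start
    while True:
        neighbours = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = min(neighbours, key=cost)
        if cost(best) >= cost(x):
            return x  # local minimum reached
        x = best


def train_agent(n_regions=10, episodes=500, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit over start regions; reward is -cost after the heuristic.

    Assumes n_regions <= 100 so that every region contains at least one point.
    """
    rng = random.Random(seed)
    width = 100 // n_regions
    values = [0.0] * n_regions  # running mean reward per region
    counts = [0] * n_regions    # number of times each region was chosen
    for episode in range(episodes):
        if episode < n_regions:
            arm = episode  # try every region once before exploiting
        elif rng.random() < epsilon:
            arm = rng.randrange(n_regions)  # explore
        else:
            arm = max(range(n_regions), key=lambda a: values[a])  # exploit
        start = rng.randrange(arm * width, (arm + 1) * width)
        result = hill_climb(start)   # heuristic refines the agent's proposal
        reward = -cost(result)       # feedback comes from the heuristic's outcome
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return values, counts


if __name__ == "__main__":
    values, counts = train_agent()
    best_region = max(range(len(values)), key=lambda a: values[a])
    print("preferred start region:", best_region, "visits per region:", counts)
```

A bandit is the simplest stand-in for the RL component because each episode here is a single decision. A fuller system would typically condition the proposal on the problem instance and use a policy-gradient or value-based method instead.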

Key Advantages of RLHO

- Enhanced Efficiency: By integrating RL, heuristic algorithms can bypass less promising regions of the search space, focusing computational efforts on areas more likely to yield fruitful results. This targeted approach significantly speeds up the optimization process.

- Improved Solution Quality: RLHO is particularly adept at handling complex scenarios where traditional heuristics might struggle or require too much time to find viable solutions. The RL component continuously refines its predictions, leading to consistently better initializations for the heuristic processes (ar5iv.org).

- Adaptability: This method is highly adaptable to various types of optimization problems, from scheduling and routing to resource allocation and beyond. The flexibility to learn from a wide range of scenarios makes RLHO a versatile tool in the arsenal of optimization techniques (ar5iv.org).

Applications and Implications

The potential applications of RLHO span industries such as manufacturing, logistics, and finance, where optimization plays a critical role in operational efficiency. For instance, in warehouse logistics, RLHO can optimize item placement and retrieval paths, minimizing travel time and reducing the workload on human operators. Similarly, in finance, it can be used to tune trading strategies by simulating and learning from different market conditions.

Challenges and Future Directions

Despite its promising advantages, RLHO faces several challenges that need addressing:

- Complexity in Implementation: The integration of two sophisticated systems (RL and heuristic methods) adds layers of complexity in tuning and implementation.

- Computational Demands: Training RL models, especially in environments that simulate complex problems, requires significant computational power and resources.

- Data Dependency: The effectiveness of the RL model is heavily dependent on the quality and quantity of feedback it receives, which in turn relies on the availability of comprehensive data during training phases.

Future research in RLHO is likely to focus on enhancing the efficiency of RL models, reducing their computational demands, and improving their ability to generalize across different types of optimization tasks. Moreover, as more sophisticated RL algorithms are developed, their integration with advanced heuristic methods will likely yield even more powerful optimization tools.

Conclusion

Reinforcement Learning Driven Heuristic Optimization represents a significant innovation in the field of computational optimization, offering a smarter, more efficient pathway to solving some of the most challenging problems. As this technology matures, it promises to revolutionize the approaches we take to optimization, making processes smarter, faster, and more cost-effective. The synergy between RL and heuristic methods in RLHO not only exemplifies the potential of combining different technological domains but also sets the stage for future advancements that could transform entire industries.

What is Reinforcement Learning Driven Heuristic Optimization (RLHO)?

RLHO is a technique that combines the adaptive learning capabilities of reinforcement learning (RL) with the efficiency of traditional heuristic optimization methods to solve complex optimization problems more effectively.

How does RLHO improve heuristic optimization?

RLHO enhances heuristic optimization by providing better initial solutions and learning strategies through reinforcement learning. This leads to improved efficiency and solution quality, as the RL component continuously refines its approach based on feedback from heuristic outcomes.

What are the main benefits of RLHO?

The main benefits include increased optimization speed, higher quality of solutions, and greater adaptability to different types of optimization problems. RLHO allows heuristic algorithms to focus their efforts on more promising areas of the search space, thus saving time and resources.

Can RLHO be applied to any optimization problem?

While RLHO is particularly effective for complex and combinatorial optimization problems, its principles can be adapted to a broad range of scenarios, making it a versatile tool in fields such as logistics, manufacturing, and finance.

What challenges does RLHO face?

Key challenges include the complexity of integrating and tuning both RL and heuristic methods, the high computational demands of training RL models, and the dependency on quality data for training the RL agent.